Methods

3.1 AGOX

AGOX stands for Atomistic Global Optimization X, where X stands for the optimization method in use (basin hopping, evolutionary algorithm, GOFEE, etc.).

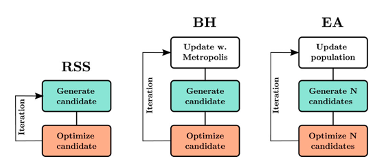

AGOX is a Python package that facilitates the reuse of code across implementations of different optimization algorithms. Consider three common optimization algorithms: random structure search (RSS), basin hopping (BH), and evolutionary algorithms (EA). These methods share components such as the creation of new candidates and the local relaxation of those candidates.
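To make the idea of shared components concrete, here is a minimal sketch in plain Python. It does not use the AGOX API: the function names, the one-dimensional toy energy function, and all parameters are assumptions made for illustration only. The point is that RSS and BH differ only in how they create candidates and whether they accept them, while the relaxation step is reused unchanged.

```python
import math
import random


def energy(x):
    """Toy 1D energy landscape with several local minima (illustrative only)."""
    return 0.1 * x * x + math.sin(3.0 * x)


def random_candidate(rng, low=-5.0, high=5.0):
    """Candidate creation from scratch (used by both RSS and BH)."""
    return rng.uniform(low, high)


def rattle_candidate(rng, parent, amplitude=1.0):
    """Candidate creation by perturbing an existing candidate (used by BH)."""
    return parent + rng.uniform(-amplitude, amplitude)


def relax(x, step=0.01, iterations=2000, h=1e-6):
    """Local relaxation: gradient descent with a central-difference derivative."""
    for _ in range(iterations):
        gradient = (energy(x + h) - energy(x - h)) / (2.0 * h)
        x -= step * gradient
    return x


def random_structure_search(rng, episodes=30):
    """RSS: relax independent random candidates and keep the lowest in energy."""
    best = None
    for _ in range(episodes):
        candidate = relax(random_candidate(rng))
        if best is None or energy(candidate) < energy(best):
            best = candidate
    return best


def basin_hopping(rng, episodes=30, temperature=0.5):
    """BH: perturb the current candidate, relax, accept with a Metropolis test."""
    current = relax(random_candidate(rng))
    best = current
    for _ in range(episodes):
        candidate = relax(rattle_candidate(rng, current))
        delta = energy(candidate) - energy(current)
        if delta < 0 or rng.random() < math.exp(-delta / temperature):
            current = candidate
        if energy(current) < energy(best):
            best = current
    return best


rng = random.Random(0)
rss_best = random_structure_search(rng)
bh_best = basin_hopping(rng)
print(f"RSS: x = {rss_best:.3f}, E = {energy(rss_best):.3f}")
print(f"BH:  x = {bh_best:.3f}, E = {energy(bh_best):.3f}")
```

An evolutionary algorithm would fit the same pattern: it would reuse `relax` and add a candidate creator that combines members of a population.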

An important element of AGOX is the collection of calculated structure-energy data and the training of machine-learned models on these data. The available models are:

Gaussian Process Regression (GPR) based on a global descriptor. Published by Christiansen et al.

Gaussian Process Regression (GPR) based on a local SOAP descriptor. Published by Rønne et al.

Neural network. Unpublished.
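As a rough illustration of how a model can be built from structure-energy data, the sketch below fits a Gaussian process regression to a handful of (descriptor, energy) pairs in plain Python. It is not the AGOX implementation: the class `ToyGPR`, the scalar stand-in for a global descriptor, the RBF kernel, the hyperparameters, and the data values are all assumptions chosen to keep the example short.

```python
import math


def rbf_kernel(a, b, length_scale=1.0):
    """Squared-exponential kernel between two scalar descriptors."""
    return math.exp(-0.5 * ((a - b) / length_scale) ** 2)


def solve_linear_system(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [value] for row, value in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        tail = sum(aug[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (aug[row][n] - tail) / aug[row][row]
    return x


class ToyGPR:
    """Hypothetical minimal GPR with a constant mean (posterior mean only)."""

    def __init__(self, length_scale=1.0, noise=1e-6):
        self.length_scale = length_scale
        self.noise = noise

    def fit(self, descriptors, energies):
        self.train = list(descriptors)
        self.mean = sum(energies) / len(energies)
        n = len(self.train)
        # Kernel matrix with a small noise term on the diagonal for stability.
        gram = [
            [
                rbf_kernel(self.train[i], self.train[j], self.length_scale)
                + (self.noise if i == j else 0.0)
                for j in range(n)
            ]
            for i in range(n)
        ]
        residuals = [e - self.mean for e in energies]
        self.alpha = solve_linear_system(gram, residuals)
        return self

    def predict(self, descriptor):
        """Posterior mean energy for a new descriptor value."""
        weights = (
            rbf_kernel(descriptor, t, self.length_scale) for t in self.train
        )
        return self.mean + sum(w * a for w, a in zip(weights, self.alpha))


# Made-up training data: scalar descriptors and energies.
descriptors = [0.0, 1.0, 2.0, 3.0]
energies = [1.0, 0.2, 0.5, 1.4]
model = ToyGPR().fit(descriptors, energies)
print(model.predict(1.5))
```

In practice the descriptor is a vector computed from the atomic structure, and the model also provides an uncertainty estimate, which this sketch omits.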

The AGOX code is hosted on GitLab, and the documentation is available online. The accompanying paper is published in J. Chem. Phys.; a preprint version is available on arXiv.
